Another Cadence Conference: A Big Headache

I had the hugest of headaches. I needed something to dull the pain so I could keep working.

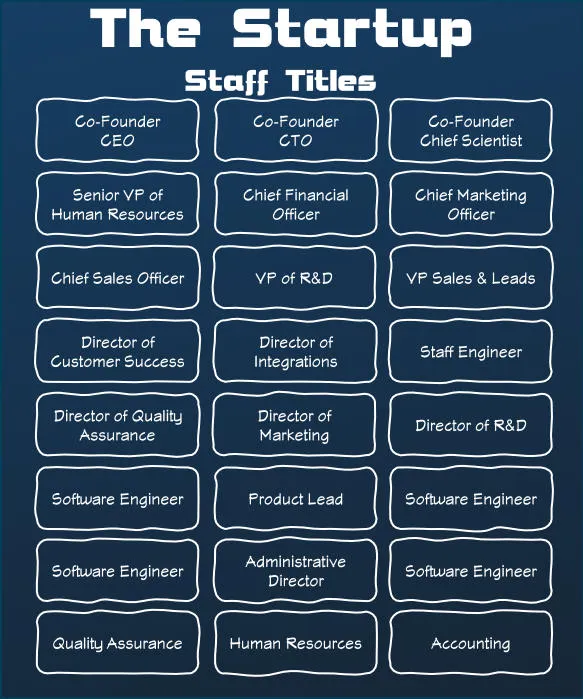

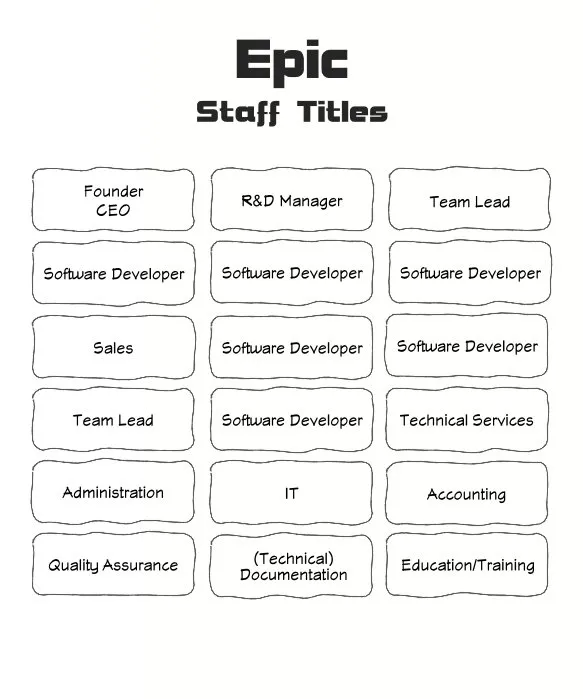

I’d been staffing the Epic “booth” at a conference in 1995 or early 1996. I’m embarrassed to say I can’t figure out what conference it was though! It was much larger than my TEPR experience. I found a “history of HIMSS” document and confirmed it wasn’t a HIMSS conference. In any case, I was there to answer technical questions about Cadence / Epic and deflect non-customers from the booth. There were a lot more Epic staff on hand — it was an important conference.

We were staying at the Marriott Gaylord Opryland Resort & Convention Center in Nashville, Tennessee (USA). It is an absolutely bonkers hotel and conference center. Take ALL of the ideas you might have about what you’d want in a hotel experience, and each one was likely manifested somewhere in the facility, if you could find it. I’ve stayed in hundreds of hotels (from theme parks to Vegas to a former castle in Ireland) and never stayed anywhere that had so many things going on. It wasn’t relaxing. Think MORE COWBELL.

From the Opryland Hotel web site:

“Inside our airy, nine-acre glass atriums inspired by the vibrant energy and charm of the region, you’ll find winding waterways, lush gardens, and an enchanting atmosphere. Wander through the tranquil paths, find a quiet spot to chat, or simply relax and enjoy the perfect 72-degree temperature.”

If you’ve been on business trips, especially Epic trips “back in the day”, you’d visit cities by arriving late at night, doing a thing for 12-16 hours, sleeping, and then waking up for an early morning flight. That was my first trip to Nashville. There probably was a city, but I arrived at night and left before dawn two days later. I only went outside to get from the airport to the hotel. One of THOSE trips.

There were two things I remember from the event. First, being a booth guard. There were thousands of attendees at this conference (still frustrated I can’t figure out what the name was!!!). There were many competitors and consultants who would attempt to watch demos or ask questions about our software. On more than a few occasions I had to Midwest Nicely ask someone to leave the booth.

The most frustrating one was a former Epic employee who had become a consultant for a competitor. He kept coming back to the booth and trying different avenues to learn about software release dates, etc. Under the guise of “friendly ex-employee asking innocent questions” he tried and tried. A little part of me felt bad for him that he’d decided to do that. But a bigger part was surprised that he thought we were gullible enough to fall for it. There are often “pre-huddles”, either at Epic or before the conference floor opens to visitors, where last-minute topics are discussed. He’d been a topic of both meetings as a warning.

I’m sincerely glad my career hasn’t required me to be on the opposite end of that.

In the middle of the afternoon on day 1, my brain hurt. I couldn’t concentrate and desperately needed some medication for a pounding headache. The cold and dry conference center air with the noises, the crowds, dehydration, talking to people … HEADACHE. I’d finally decided to try to find my hotel room (which was an adventure itself) and get something for my headache.

As I was just about to leave the main exhibitor hall, Judy saw me and asked how I was doing. Yes, she knew my name. 😀

I told her it was going well other than the pounding headache I’d developed.

She said “hold on!” and began to shuffle through her handbag/purse. A moment later, she offered me two ibuprofen/aspirin/what-evers. 99% of the time I would have declined that offer from nearly anyone (as I’m a no-double-dipping, wash-your-hands germaphobe), but the gesture was so unexpected and my head so pounding that I accepted them graciously and eagerly, and used what little water I had left in a water bottle to swallow them immediately. I of course said thank you, and I don’t think we chatted further.

Your two take-aways from this post:

- Don’t be the person who tries to get ex-coworkers and friends to tell you secrets.

- Judy gave me drugs (yes, OTC drugs, but still).

If anyone knows what conference this would have been, I’d appreciate knowing and will happily update this post with information.
