There was more in the field than just cows and grass

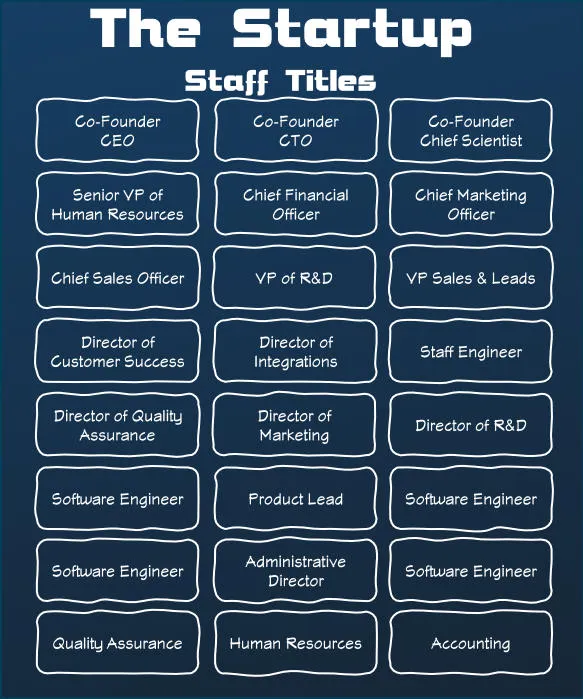

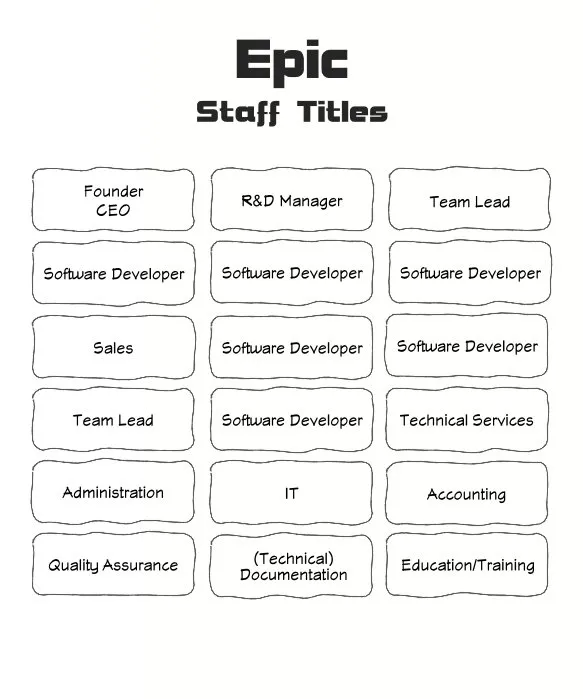

Overstaffed with newly hired software developers on Cadence, the small Cadence GUI team had to adjust to the economies of doing Epic business.

The Cadence development team had approximately doubled in size and simply did not have enough experienced developers to maintain a high degree of software quality. In fact, the quality had noticeably dropped. New code. New bugs. It was a runaway train … of bugs (yuck!). Of course, it wasn’t really the new developers’ fault — they were new and the code base was already quite large and very complex in many functional areas. The environment was stacked against their success unfortunately.

Using a Midwest cow 🐄 analogy as is common with Epic — the area that was fenced off for Cadence had unfortunately a massive number of cow-pies you could step in. (Cow pies = 💩 for those not in the know.)

There wasn’t much documentation to help them either. The code was … shudders … the documentation. It was a ruthless enemy of knowledge. MUMPS code can be a real chore to follow and debug, especially with the ability to extend workflows with customer driven code extensions (we called them “programming points” for many years). Cadence used these extensions a lot in some workflows.

Programming Points used in Chronicles Screen Paint added a whole new level of “whaaaa???” to projects.

A basic example of these programming points in use could be when an appointment was scheduled. When that happened, custom code, either written by Epic for a customer or by the customer directly, would be executed. An appointment might trigger an interface message to another software system or print a label, or … Whatever was wanted! It literally was just a placeholder for “execute anything.” There are better design patterns for creating functionality like this today, but the Epic solution was functional and worked within the MUMPS environment. The often frustrating part of the programming points was understanding what their side effects might be and what expectations programming point code might have. They weren’t documented well. To be clear, the level of documentation these programming points received was often better than what other external systems and products were doing at the time (which was generally little to nothing), but we hadn’t delivered something remarkably better either. It would have been great if more boundaries had been clarified, for sure.
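
For readers who haven't run into this pattern, here is a rough sketch of the idea in Python. It is not Epic's code and not MUMPS; every name in it (`ProgrammingPoints`, `appointment_scheduled`, `print_label`, `flag_for_review`) is made up for illustration. It only shows the shape of the thing: a workflow reaches a named point and runs whatever code has been attached there.

```python
from typing import Callable, Dict, List

# Hypothetical illustration only: these names are invented and do not
# correspond to any real Epic, Cadence, or Chronicles API.
Hook = Callable[[dict], None]


class ProgrammingPoints:
    """A tiny registry of named extension points."""

    def __init__(self) -> None:
        self._hooks: Dict[str, List[Hook]] = {}

    def register(self, point: str, hook: Hook) -> None:
        # Attach custom code to a named point.
        self._hooks.setdefault(point, []).append(hook)

    def fire(self, point: str, context: dict) -> None:
        # Run every hook attached to the point, in registration order.
        # Hooks receive the live record and may change it freely.
        for hook in self._hooks.get(point, []):
            hook(context)


def schedule_appointment(points: ProgrammingPoints, patient: str, slot: str) -> dict:
    appointment = {"patient": patient, "slot": slot, "status": "scheduled", "log": []}
    # The core workflow has no idea what runs here.
    points.fire("appointment_scheduled", appointment)
    return appointment


def print_label(appt: dict) -> None:
    # Stand-in for printing a label.
    appt["log"].append(f"label printed for {appt['patient']}")


def flag_for_review(appt: dict) -> None:
    # An undocumented side effect: the hook rewrites the status.
    appt["status"] = "needs_review"


points = ProgrammingPoints()
points.register("appointment_scheduled", print_label)
points.register("appointment_scheduled", flag_for_review)
appt = schedule_appointment(points, "Pat Example", "09:00")
print(appt["status"], appt["log"])
```

Notice that `schedule_appointment` returns a record whose status is no longer "scheduled", and nothing in the core workflow tells you why. That is the kind of side effect a reviewer had to hunt for, often without access to the customer's hook code at all.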

To further add complexity to these programming points, it was uncommon for Epic to have access to the code used at customers (and especially in a live/demo/testing environment).

While there were a number of starter projects for the new hires, each project required more attention for design, programmer review, and testing than they had been getting. The team's experienced developers were generally trusted to commit good code, so code reviews and testing had been light. But, the new round of hires changed everything.

A choice was made in a meeting I wasn’t invited to attend. I would have needed my perfect poker face at that meeting to not express my true feelings. It’s better that I hadn’t attended as I doubt I could have maintained a “rah rah” attitude.

In this meeting, it was decided that Cadence GUI software developers would spend no more than 8-10 hours a week on GUI, with the rest devoted to code review and testing. Ugh!!!

While I absolutely understand it was a necessary outcome as a positive Epic business decision, the impact on my personal happiness was profoundly negative. I went from the daily new challenges of building Cadence GUI to a slog of reading MUMPS code, trying not only to interpret code that was often new to me, but also to judge whether the code was the right choice. It was draining. Day after day after day.

And day after day. After day.

As has been a common Epic theme over the decades, Epic had committed a lot of functionality for sales and customers, and the result was a massive backlog of development that was contractually committed for the next release cycle. While I’m sure the senior developers could have completed the work faster and with fewer issues, it would have been a terrible disservice to the new hires and our future selves. We threw them into the fire. This was a period where we had few fire extinguishers as well. Many fires needed extinguishing. We needed the new hires to learn how to prevent code fires earlier and that required us to adjust.

The days continued. This period went on for about 3-4 months before I’d reached my breaking point.

You’re probably thinking that I was impatient. Yes, about many things I’m terribly impatient (don’t ask my wife!!!). When my mind goes into boredom mode, my enthusiasm shuts down. My itch for creative outlets becomes the focus of any idle time (and often distracts me from the task at hand). Being allowed about a day a week to work on Cadence GUI was a tease in many ways. Little got done as it was difficult to start and stop something that was so fundamentally different from the quality assurance work. Context switch. Context switch. GUI! Context switch. I’m sure my hours worked went UP during this period so I could work more on the thing that I was excited about (sad, but true).

I’ll be interested to hear what others think — is the ability to shift resources for short term crises like this a strength or a weakness of Epic culture? The number of times that something comes up and a human is tasked at Epic to do something else for some period is uncountable. Emergencies — sure. But, what about when the reason is poor planning?

I could draw a lot of squiggly lines on a whiteboard that would eventually connect to demonstrate why I found myself back in Carl’s office at the end of this period asking for a new team or project. It turned out — there was a need elsewhere as EpicCare Ambulatory had outgrown Visual Basic 3 and Windows capabilities, so there was work that needed to be done. They had dug themselves a large hole with their design and implementation that ran into unbreakable limits within Windows 3.11 and Windows 95.

But, that’s a story for next time.
