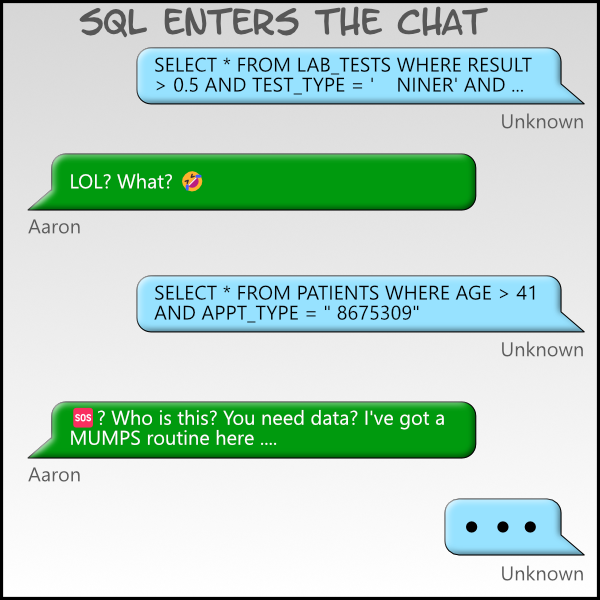

SQL Enters the Epic Chat

Happy 30th birthday to SQL at Epic (1994-2024)!

Did you know that SQL wasn’t always available at Epic? And that before Clarity … there was a way to use SQL against Chronicles databases? I know! It’s wild! In the time before SQL there was only Search and Report Generator (I shudder from needing to use those very often).

The specific start date of the project is fuzzy to me, but I was asked to assist with the effort of embedding a SQL solution while still working on the Cohort Lab product team. Even back in late 1993 and 1994 Epic was hearing from potential new customers that the lack of an industry standard query language for accessing Epic data could be a sales challenge. They wanted a better way to get access to their data; their requests were very reasonable.

The Epic built-in tools were a hard sell. With the right Epic staff and wizardry, a lot could be done with the tools, but wielding them required great patience and knowledge. My TL could easily shame me with her knowledge and skills at the time. She could unlock the doors and breeze through the data. I, on the other hand, would routinely walk straight into the closed doors and stumble around in the darkness. The experience of editing and creating reports was also …, well, cumbersome. The workflows were all prompt and menu driven, and it was very easy to become lost in the experience. It never clicked for me.

The Epic Foundations team (maintainers of Chronicles and other lower level utilities at the time) was tasked with enabling SQL access to Chronicles data (I think it was just one person working on this task full time). If you were to sit down and design a proprietary database that was generally optimized for healthcare datasets and then try to layer a standards-based structured query language on top of it, you’d likely decide that the two cannot be combined effectively and that other access mechanisms would be more reasonable (create an API I hear you say!). But, in the 1990s, that wasn’t a thing; it just meant more programming and required customers to have skills they shouldn’t have needed. Epic software was being sold into organizations where Epic was just one piece of the software IT puzzle and was not the massive “Solution” with so many systems that it is today. It wasn’t The Enterprise, just Enterprise-ready.

Epic’s software needed to integrate. Data needed to be combined with other data. IT staff didn’t have time to learn another reporting tool, extraction layer, etc. Further, there were admittedly quite a few limits with data reporting back then that made gathering data from multiple Epic databases (AKA table groups) perplexing and complex. A SQL solution would enable a whole new world of capabilities for customers and Epic developers.

Here’s the rub though: Chronicles doesn’t map well to “tables” like you’d find in a traditional relational database. In fact, it’s not a natural fit at all. If you’re an (ex)Epic employee reading this — yes yes yes. It isn’t too terrible the way it’s all mapped now. But, getting there wasn’t so straightforward.

One of the early decisions was to buy a license for a MUMPS-based software package from a very tiny software company (I think it was just one full-time guy, Dave Middleton, the owner, and maybe a part-timer?). The product was called KB-SQL. It seems that the company was acquired in 2022 and the product still exists: Knowledge Based Systems.

I know the initial price of the Epic part of the license was more than a typical software developer’s yearly salary at Epic. That was HUGE, especially since it was such a small part of the overall Epic system. And, Epic is very OMG frugal when it comes to software spending. Each customer then had to pay for “per-user” licenses to run it on their systems.

KB-SQL was a very interesting solution and setup. It had two things that made it very “modern” at the time — a full screen text editor (EZQ) for writing and running queries and an extension mechanism for connecting the editor and query builder/runtime to an arbitrary data source. Even with that extensibility we still needed to actually map the Chronicles data to tables/schemas. We had a LOT of meetings diving into the way Chronicles worked and the way KB-SQL worked. The combination forced some interesting limitations that we had to design around. Dave made changes to accommodate Epic’s requirements when practical. We wrote a lot of experimental queries to test the design.

I remember table and column names had to be far fewer characters than we wanted (12 I think?). I kept writing and adjusting tools to run through all of the Epic built Chronicles databases at the time to test our naming conventions (and to provide a way for us to apply the names automatically as much as possible). I’d print out the results and we’d often go, “bleh, that’s awful.” It took some time and many tables needed some adjusting. The final tool I’d written for this project had a list of common abbreviations we needed to apply and Epic naming conventions so that it could shorten names as much as possible while feeling like the names came from the same company rather than teams deciding on their own patterns.
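The post doesn't show the actual tool, so the following is only a rough sketch of how an abbreviation-driven name shortener along those lines might work. The abbreviation list, the function name `shorten_name`, and the rule of abbreviating from the last word backwards are all assumptions made for illustration; only the 12-character limit comes from the recollection above.

```python
import re

# Hypothetical abbreviation list; the real tool's list is not given in the post.
ABBREVIATIONS = {
    "PATIENT": "PAT",
    "DEPARTMENT": "DEPT",
    "RESULT": "RSLT",
    "SPECIMEN": "SPEC",
    "CATEGORY": "CAT",
    "NUMBER": "NUM",
}

MAX_LEN = 12  # the limit as recalled in the post ("12 I think?")


def shorten_name(description: str, max_len: int = MAX_LEN) -> str:
    """Turn a free-text item description into a short SQL-style identifier."""
    words = re.findall(r"[A-Za-z0-9]+", description.upper())
    name = "_".join(words)

    # Abbreviate one word at a time, starting from the end, and stop as soon
    # as the name fits. Names that already fit are left fully spelled out.
    for i in range(len(words) - 1, -1, -1):
        if len(name) <= max_len:
            break
        words[i] = ABBREVIATIONS.get(words[i], words[i])
        name = "_".join(words)

    # Last resort: hard truncation (the kind of result that earns a "bleh").
    return name[:max_len].rstrip("_")


if __name__ == "__main__":
    for text in ("Patient ID", "Patient Department Number", "Specimen Result Number"):
        print(f"{text!r:30} -> {shorten_name(text)}")
```

Running something like this across every database and printing the results is the sort of loop that makes it easy to spot awkward names and adjust the abbreviation list.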

We created new Chronicles items to store the metadata needed by the KB-SQL engine. Many lines of code were written to convert the queries into compiled MUMPS code (all behind the scenes). The compiler along with KB-SQL tooling had deep knowledge of the Chronicles database and could produce a best-case MUMPS routine for a single query. The temporary routines were not meant for human consumption. They stored temporary results in intentionally obscure/unused globals and did direct global access when possible (although the push to using APIs as mentioned previously made this more challenging).

By doing execution this way, it provided the best runtime experience for the query author. Generating the code wasn’t slow, so the step seemed very reasonable. That choice did mean that moving a query to another Epic environment as part of an installation for example would trigger a build at that time. There was no Epic branding or wrapper placed around the KB-SQL editor experience. For 1994, it was slick.

We decided on naming conventions so that the types of tables and data were more obvious from the name. For example, because some data is time oriented, those tables needed the concept of a timestamp. If you were using a timestamped table row, you needed to absolutely not forget to use it in the query or the results could be wrong and LONG! Some tables were no more than a category (pick) list (TEST_TYPES_CAT). We added short postfix notation to most tables, which was annoying, but without it, it was very very difficult to understand what was what (there was no EZQ-intellisense in the code editor!). Common columns in a database were named based on the database when possible (PATIENT_ID, for example). Each database mapped to potentially dozens and dozens of tables, so having a consistent convention helped tremendously when building queries. Following a long standing tradition at Epic, temporary queries were prefixed with X_{Initials}_{Name}, with each Epic product team having a prefix letter reserved for standard queries that were shipped with Epic software.
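To make the timestamp warning concrete, here is a small sketch using Python's built-in `sqlite3` as a stand-in for KB-SQL (which it is not). The table and column names (`PATIENT`, `PAT_STATUS_TS`, `STATUS_TS`) are made up for the example and only loosely imitate the conventions described above. It shows how a join that ignores the timestamp returns one row per timestamped entry rather than one row per patient.

```python
import sqlite3

# In-memory database standing in for the real system; all names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE PATIENT (PATIENT_ID INTEGER PRIMARY KEY, PAT_NAME TEXT);
    CREATE TABLE PAT_STATUS_TS (PATIENT_ID INTEGER, STATUS_TS TEXT, STATUS TEXT);
    INSERT INTO PATIENT VALUES (1, 'A'), (2, 'B');
    INSERT INTO PAT_STATUS_TS VALUES
        (1, '1994-01-01', 'ADMITTED'),
        (1, '1994-01-05', 'DISCHARGED'),
        (2, '1994-01-03', 'ADMITTED');
    """
)


def rows_without_timestamp():
    """Join that forgets the timestamp: one row per status entry."""
    return conn.execute(
        """
        SELECT p.PAT_NAME, s.STATUS
        FROM PATIENT p
        JOIN PAT_STATUS_TS s ON s.PATIENT_ID = p.PATIENT_ID
        """
    ).fetchall()


def rows_latest_only():
    """Same join, restricted to each patient's most recent timestamp."""
    return conn.execute(
        """
        SELECT p.PAT_NAME, s.STATUS
        FROM PATIENT p
        JOIN PAT_STATUS_TS s ON s.PATIENT_ID = p.PATIENT_ID
        WHERE s.STATUS_TS = (
            SELECT MAX(STATUS_TS) FROM PAT_STATUS_TS
            WHERE PATIENT_ID = p.PATIENT_ID
        )
        ORDER BY p.PATIENT_ID
        """
    ).fetchall()


if __name__ == "__main__":
    print(len(rows_without_timestamp()), "rows without the timestamp filter")
    print(rows_latest_only())
```

With two patients the difference is one extra row. With years of timestamped history per patient, the unfiltered result grows accordingly, which is the "wrong and LONG" outcome.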

Locating an item wasn’t as easy at the time as I would have liked. If you had a specific Chronicles item you wanted, you needed to either remember where it was or consult the Chronicles Dictionary. It wasn’t hard to do, but it wasn’t the ideal user experience. We produced documentation with the details for external and internal use, although it wasn’t satisfying.

We automated as much as we could for application teams. I honestly don’t recall anyone being particularly enthused about the new SQL support directly. Unfortunately, it was a “one-more-thing” to worry about, learn, test, etc. Maybe because I was too close to the project, I was the opposite. I wanted to push this thing to its limits and beyond. I frequently did (and broke things in spectacular ways). In making and testing lots of reports for Cohort Lab though, it became very evident that SQL alone wouldn’t be enough to produce the best reports. KB-SQL had what I’m going to call “user-defined-functions”. These were MUMPS code based, but wrapped up into KB-SQL in such a way that a developer could use them to enhance both the query and the column output. I made miracles happen with the data (miracles may be a stretch, but they were super useful and really tricked out the system; some were moved into standard code and shipped with all Epic products). Whereas Chronicles Report Generator and the ad-hoc search capabilities built into Chronicles always left me wanting, the SQL support gave me reporting super-powers. Writing queries that used multiple databases was no longer a technical hurdle I needed to jump and stumble over; it was a few queries away using a standard language tool. When it fell short, UDFs to the rescue!

Because of the way Chronicles structures data, building code that traversed the data most efficiently required some adjustments and new size calculations to be stored (how many keys were in an index, for example). Each candidate index needed to be scored against the other potential options to select the best one. I haven’t added this to my LinkedIn profile, but I know I wrote my fair share of Cartesian product joins too, especially at the beginning. My skills at quickly killing the right process on the shared server grew day by day.
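The post doesn't describe the actual scoring, so this is purely an illustrative sketch of the general idea: use a stored key count per index to estimate how many records a lookup would touch, then pick the index with the lowest estimate. The `IndexInfo` type, the function names, and the "records divided by distinct keys" estimate are all assumptions, not the real Chronicles or KB-SQL logic.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class IndexInfo:
    """Hypothetical stored metadata about one index."""
    name: str
    column: str
    distinct_keys: int  # the kind of size calculation stored ahead of time


def estimated_rows(index: IndexInfo, total_records: int) -> float:
    """Rough guess at records touched per lookup: fewer is better."""
    return total_records / max(index.distinct_keys, 1)


def choose_index(
    indexes: Iterable[IndexInfo],
    filtered_columns: Set[str],
    total_records: int,
) -> Optional[IndexInfo]:
    """Pick the usable index with the lowest estimate, or None to scan."""
    usable = [ix for ix in indexes if ix.column in filtered_columns]
    if not usable:
        return None
    return min(usable, key=lambda ix: estimated_rows(ix, total_records))


if __name__ == "__main__":
    indexes = [
        IndexInfo("BY_SEX", "SEX", distinct_keys=3),
        IndexInfo("BY_BIRTH_DATE", "BIRTH_DATE", distinct_keys=20_000),
    ]
    best = choose_index(indexes, {"SEX", "BIRTH_DATE"}, total_records=100_000)
    print(best.name if best else "full scan")
```

An index with only a handful of keys barely narrows the search, which is why the key counts are worth storing. When no index applies at all, every record gets visited, and a join without a usable condition multiplies those visits across both tables.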

We also added enhancements so that sending reports to the screen or a file used Epic’s standard systems (which in turn unlocked some improvements to the way device output selection was done).

For me, the most amazing feature that all of this work eventually unlocked was that there were database connectors available for Windows! Using a then modern tool or programming language that supported the KB-SQL driver, I could access Chronicles data directly without a terminal or Epic application! It seems so ho-hum these days, but in 1994, that was big. It provided a level of data access that Epic couldn’t do otherwise without custom coding.

It was a fun and important project for Epic to work on and I’m glad I was along for the ride. I don’t know the specific date when a customer first had production access to KB-SQL and Epic, but it was sometime in 1994 (I’ve got a post in mind to talk about the release schedules back then).

Dave probably grew weary of all of our requests and dreams and wants back then, but he was great to work with all along the way. I see that he retired in August 2023 — so congratulations to him!
