The App is Too Fast!

We’d just finished a review session of the latest development of Cadence GUI with Judy. The feedback was generally positive except for one thing:

When switching between future and past appointments: “Slow that down somehow to make it more obvious what’s happening.”

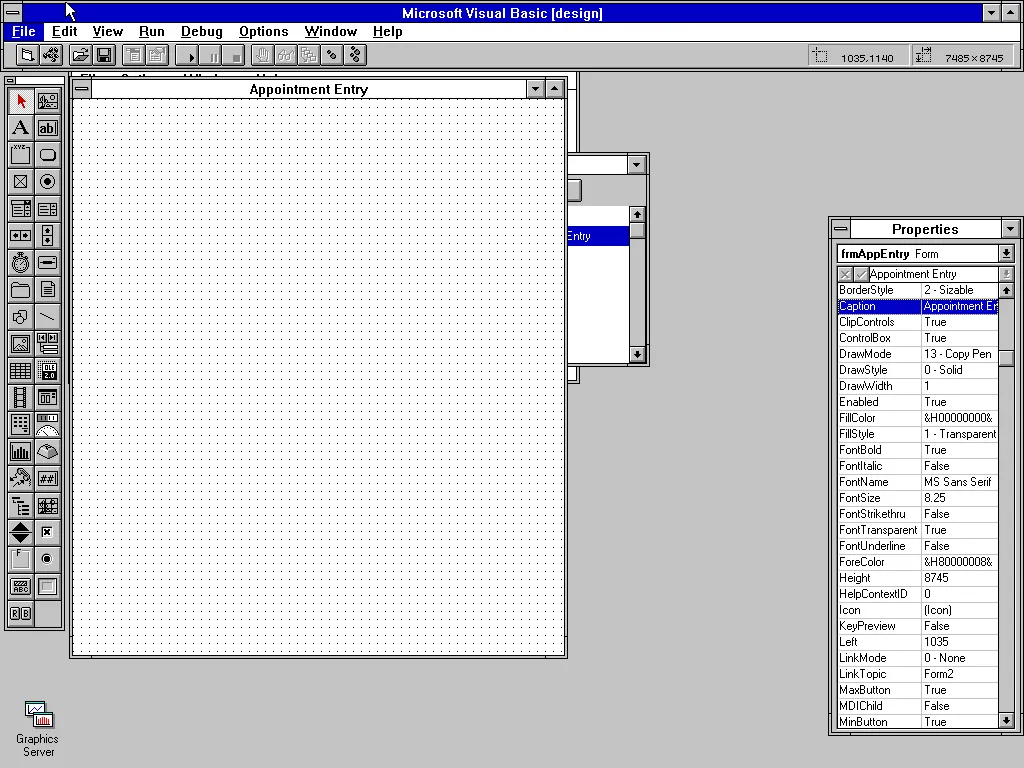

We’d just spent a month adding more features to the new graphical user interface for Cadence, Epic’s outpatient patient scheduling application. One of the goals we’d set for the project was that a workflow would be as fast as it was in the existing terminal application. Honestly, that was a lofty goal in many ways, especially as we were in uncharted territory and also wanted to add a number of often-requested features to common workflows.

Of course, the existing terminal-based application experience couldn’t be modified to make Cadence GUI work (especially its workflows or speed). A lot of developer hours went into figuring out how to maintain two distinct applications that needed to share a common code base. If you’ve ever worked on a terminal/console application that has input and output, it may not surprise you that these types of operations are, frankly, everywhere. No architectural dig was necessary: as we began to adapt the code, we found IO calls spread throughout it, which was frustrating. It wasn’t a bug either; it was just the way code was written then. Sometimes we’d find these IO calls early in development, and sometimes the elusive buggers would be uncovered in a QA pass (obscure configurations often aided in their discovery). Building an application in the late 1980s whose IO could be directed at anything but a terminal would have been an unnecessary abstraction. Application performance would have suffered for zero gain in user experience. MUMPS code needed to be tight and efficient.

I’m planning a blog post specifically about some of the challenges we faced regarding this type of work and the communication channel, so I’ll skip ahead for now.

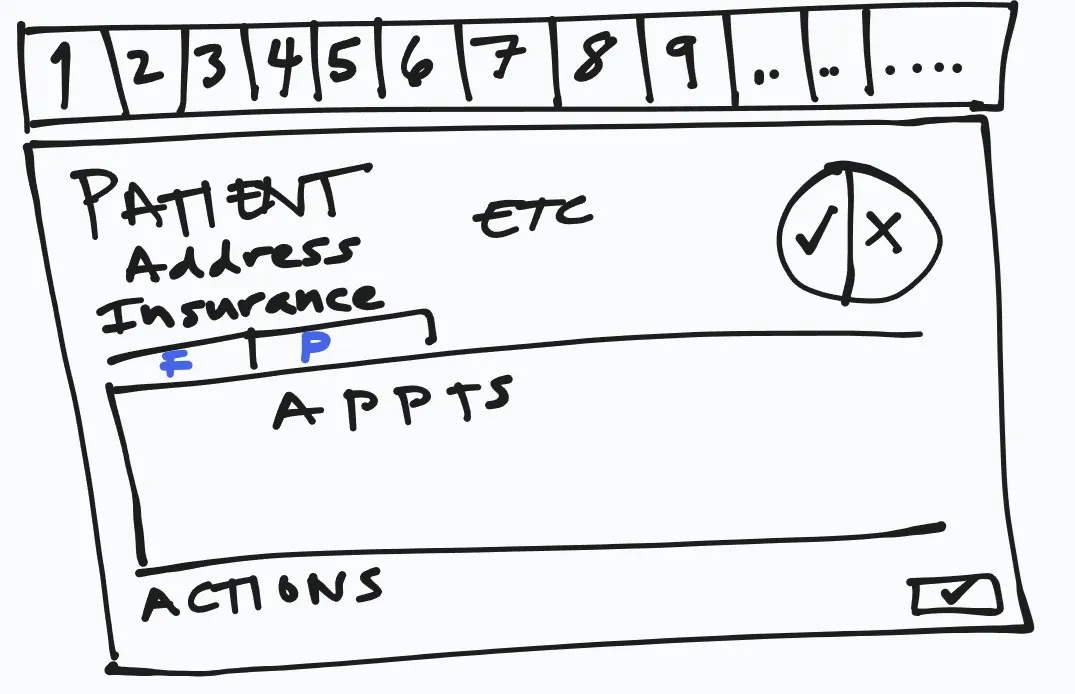

One of the first screens we wanted to show was a patient summary view. The screen would show a summary of the patient demographics, DNK appointment statistics and upcoming appointments. It would serve as a “patient dashboard” and launching point to other application functionality. This experience was not similar to the one that the EpicCare team had been building. While we shared some general UI patterns (big row of buttons floating at the top of the screen), they’d made some choices that wouldn’t work well for a Cadence user (unsurprisingly, EpicCare Ambulatory needed to make quite a few design and architectural changes years later to accommodate improved workflows and capabilities). Specifically in this case (and in contrast to EpicCare at the time), a Cadence scheduler might need to open more than one patient at a time.

The app workflow from patient selection to launching the review screen was snappy. In a head-down, side-by-side comparison, the terminal UI was faster, but it wasn’t offering as much utility. The additional features were ones that had been requested, but weren’t available directly and consistently in the terminal app. We were happy with the results.

While obviously nervous about the demonstration to Judy, we were reasonably confident that it would show well.

And it did go well, except for feedback about switching between future and past appointments.

It wasn’t a lot of data and the request to fetch past appointments was quick. When the Cadence scheduler would click on the “past” appointments tab/label, the new list would pop in what seemed like instantly (for back then — it was 1994, so the common experience was that things would be a bit sluggish).

“Can you slow that down?” — Judy

The team lead wisely, after a few rounds of “huh?”, said we’d look into some options.

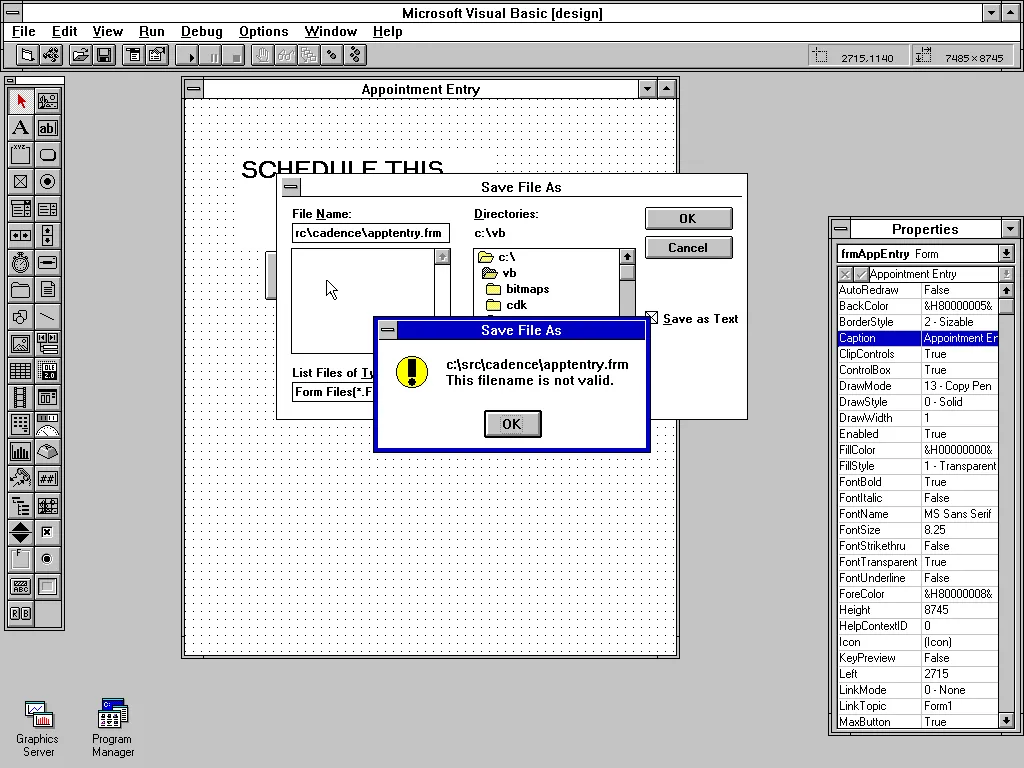

If you weren’t doing application development for Windows 3.11, you may have already “solved” our problem with one of many modern solutions:

- Animation

- Colors or Opacity

- Fonts

- Layout changes

Windows 3.11 Development Issues

Here’s what wasn’t available to us:

- We had 16 colors available generally, or a dithered 256. Opacity was either 100% or 0%.

- There was no animation framework (and frankly at the FPS of a common computer back then, it would have been annoying)

- We were limited to the fonts installed on the OS, and those weren’t many. The standard font used in VB at the time was MS Sans Serif. It was perfectly ordinary. Sometimes we used bold. Other times, not. Using bold alone as an indicator wasn’t great.

The Limitless Color Palette

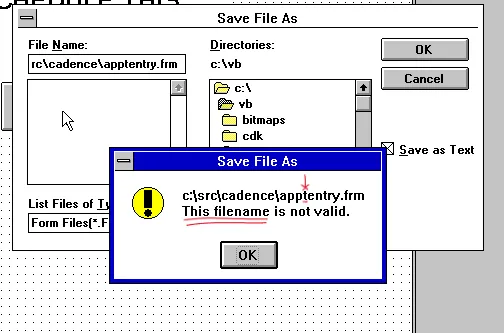

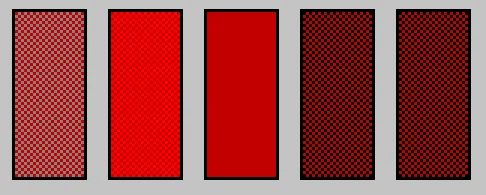

Let me zoom that for you. It may not be obvious yet though …

That may not have been enough, so one last time:

As you can see, all but the middle red color are dithered. While we did use dithered colors occasionally, we did try to avoid situations where they were used with text on top as it was too hard to read. Dithered colors looked … odd … generally.

We essentially had 16 solid colors, pre-chosen by Microsoft, that we could be assured would look OK to most folks.

Animation

Visual Basic 3.0 applications were single-threaded. If the application was animating, it wasn’t doing other things for the user. There wasn’t a graphics processor that could offload animations … there just wasn’t a good way to do animations that were effective.

Solutioning…

We tried quite a few different things before the next demonstration with Judy. At the time, there was a silly way to animate a GIF file, but it all ran on the main application thread. For a brief period, we had a build of Cadence that would show a silly little dancing bear that would pop up and dance when switching between future and past appointments.

Needless to say, that wasn’t an option. I recall us floating the idea to her (with something other than a bear!). But, we didn’t like it either because while it was animating, the application was blocked. That wasn’t a great way to make the application “as fast” as Cadence text/terminal.

Solved with this one Hack

Oh, my head hurts that this was the primary solution that we used for quite a while to satisfy the issue:

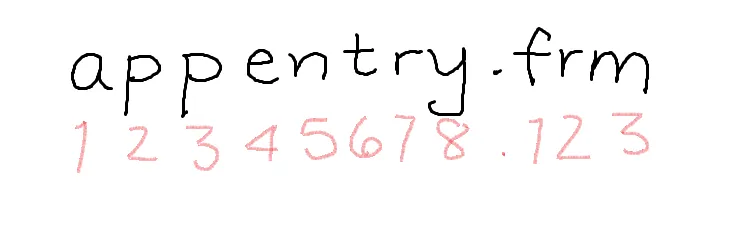

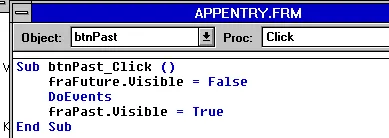

Sub btnPast_Click()

' Hide the future appointments frame

fraFuture.Visible = False

' Process pending messages now, so the hidden state is painted

DoEvents

' Show the past appointments frame

fraPast.Visible = True

End Sub

DoEvents. A necessary evil in many Visual Basic applications over the decades. When something didn’t quite work as expected, developers would often turn to DoEvents as a solution without fully appreciating that there were grave risks to its use. The core functionality of DoEvents was to allow the application to process events/messages in the message queue for the application. The events were processed in order, but unless the developer had planned for them, processing them in the middle of a handler could lead to disastrous results.

Internally, hiding fraFuture by using the Visible property would put a WM_PAINT message onto the message queue for the application (along with the dirty region). When application code wasn’t running, Windows would process the queue until it was empty, including WM_PAINTs (pending paints were combined to prevent cascading updates). As I mentioned, this is a single-threaded application, and processing the queue only happens when there isn’t Visual Basic code executing (VB handled this automatically).

When using DoEvents though, it would force the queue to be processed. So, the screen would update immediately (generally along with anything else that may have been queued).

So, what we’d done: introduce a flicker.

- Hide

- Repaint

- Show

- Repaint (which happened naturally by showing the new frame)
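To make the sequence concrete, here is a minimal sketch of the idea in Python. It is a toy model, not Visual Basic and not the real Windows message loop: `ToyWindow`, `pump`, `switch_to_past`, and `frames_seen` are all hypothetical names invented for illustration, and `pump` only stands in for "the queue gets processed and the window repaints." The one thing it tries to show is that flushing between the hide and the show produces an extra, empty frame.

```python
class ToyWindow:
    """Toy stand-in for a single-threaded form; not the real Windows API."""

    def __init__(self):
        self.visible = {"future": True, "past": False}
        self.dirty = False   # one coalesced "needs repaint" flag
        self.frames = []     # what the user actually saw, in order

    def set_visible(self, name, value):
        self.visible[name] = value
        self.dirty = True    # several changes still mean one pending paint

    def pump(self):
        """Repaint if needed; stands in for processing the message queue."""
        if self.dirty:
            shown = sorted(n for n, v in self.visible.items() if v)
            self.frames.append(shown)
            self.dirty = False


def switch_to_past(win, flush_between):
    win.set_visible("future", False)
    if flush_between:
        win.pump()           # plays the role of DoEvents
    win.set_visible("past", True)


def frames_seen(flush_between):
    win = ToyWindow()
    switch_to_past(win, flush_between)
    win.pump()               # handler has returned; the idle loop repaints
    return win.frames


print(frames_seen(False))    # one frame: the past list simply appears
print(frames_seen(True))     # two frames: an empty one, then the past list
```

In this model, the version without the flush paints once, while the version with the flush paints an empty frame first. That empty frame is the flicker.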

DoEvents was the source of a number of issues over the years as it “solved” problems — and created dozens of new problems.

Developers frequently neglected to handle situations where the user had interacted with the application during a busy state. DoEvents would allow those queued requests (like typing, or clicking a mouse) to be processed immediately, even though the normally sequential code hadn’t returned.

Sub btnPast_Click()

fraFuture.Visible = False

DoEvents ' ☠️

fraPast.Visible = True

End Sub

☠️ DoEvents: Process the queue, including user-originated events. Had the user clicked on a button that closed the form they were using? What if something else changed the visibility of fraFuture? Or maybe they clicked back on the other tab while it was busy or … INSTABILITY!!
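The re-entrancy hazard can also be sketched as a toy model. Again, this is Python rather than Visual Basic, and every name in it (`ToyApp`, `do_events`, `on_past_click`, `run`) is hypothetical. It assumes a second click is already waiting in the queue when the first handler starts, and it compares an unguarded handler with one protected by a simple busy flag, a commonly used mitigation and not necessarily what we did in Cadence.

```python
from collections import deque


class ToyApp:
    """Toy single-threaded event loop; a model, not Visual Basic itself."""

    def __init__(self, guarded):
        self.queue = deque()   # pending user events (handler callables)
        self.guarded = guarded
        self.busy = False
        self.depth = 0         # handler calls currently on the stack
        self.max_depth = 0
        self.skipped = 0

    def do_events(self):
        # Drain the queue right now, even though a handler is still running.
        while self.queue:
            self.queue.popleft()()

    def on_past_click(self):
        if self.guarded and self.busy:
            self.skipped += 1  # ignore clicks that arrive mid-handler
            return
        self.busy = True
        self.depth += 1
        self.max_depth = max(self.max_depth, self.depth)
        self.do_events()       # queued clicks run here, re-entrantly
        self.depth -= 1
        self.busy = False


def run(guarded):
    app = ToyApp(guarded)
    app.queue.append(app.on_past_click)  # a second click is already waiting
    app.on_past_click()
    return app.max_depth, app.skipped


print(run(False))  # handler re-entered: nesting depth reaches 2
print(run(True))   # guard holds: depth stays at 1, one click is dropped
```

The guard keeps the handler from nesting inside itself, but it does so by discarding the user's second click, so it trades one problem for a smaller one rather than making a mid-handler flush safe.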

Happy

She was happy with the result. We weren’t, but moved forward regardless. There was lots more to do.

DNK

Do you know that acronym? It was an important statistic for schedulers.